Study evaluates medical advice from AI chatbots and other sources

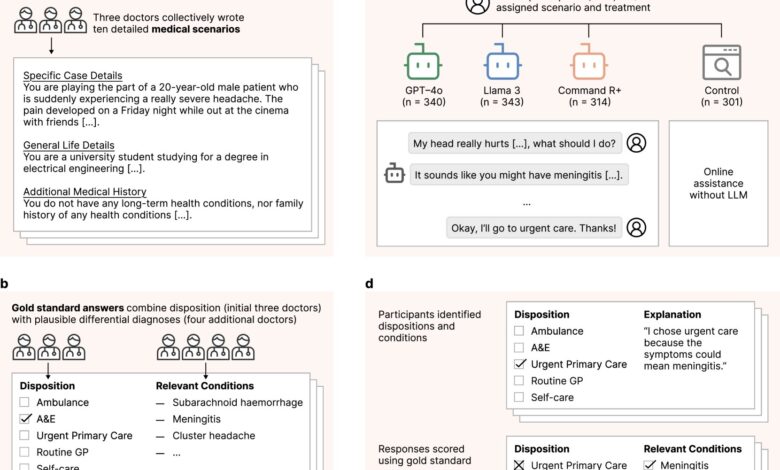

The debate over the reliability of chatbots in providing medical advice continues to grow. A team of AI and medical researchers has conducted a study testing the accuracy of the information and guidance that large language models (LLMs) give to users. The study, posted on the arXiv preprint server, involved 1,298 volunteers who interacted with chatbots to seek medical advice, which was then compared with advice obtained from other online sources or with the user’s own judgment.

People increasingly turn to chatbots like ChatGPT for medical advice because they are convenient and accessible, so the researchers set out to evaluate the quality of the advice these AI systems provide. Previous research has demonstrated the impressive capabilities of AI apps in medical contexts, but few studies have explored how well those capabilities translate to real-world scenarios. Additionally, studies have highlighted the importance of doctors’ expertise in guiding patients to ask relevant questions and to provide accurate answers.

In the study, volunteers were randomly assigned either to use an AI chatbot, such as Command R+, Llama 3, or GPT-4o, or to rely on their usual resources, such as internet searches or personal knowledge, when faced with a medical issue. The researchers analyzed the conversations between the volunteers and the chatbots and noted that crucial information was often omitted, leading to communication breakdowns. Comparing the chatbots’ advice with advice obtained from other sources, including online medical sites and the volunteers’ own intuition, revealed mixed results: some of the advice was comparable, but in other instances the chatbots provided inferior guidance.

Moreover, the study found that using chatbots occasionally made volunteers less likely to correctly identify their ailments and more likely to underestimate the severity of their conditions. As a result, the researchers recommended seeking medical advice from more reliable sources instead of relying solely on chatbots. The findings underscore the importance of caution and critical thinking when using AI-powered tools for medical guidance.

The research article titled “Clinical knowledge in LLMs does not translate to human interactions” sheds light on the limitations of chatbots in providing accurate medical advice. For more information, readers can access the study published on the arXiv platform with the DOI: 10.48550/arxiv.2504.18919. The study’s insights offer valuable considerations for individuals seeking medical information and highlight the need for discernment in choosing information sources.

© 2025 Science X Network. This content is subject to copyright and should not be reproduced without permission. For further details on the study, please refer to the original source at https://medicalxpress.com/news/2025-05-chatbot-accuracy-medical-advice-ai.html.